Roadblock Recon:

Barriers to Customer Research

An exploratory research project for Lyssna (FKA UsabilityHub), a SaaS company specializing in research tools and platforms.

Background & Goals

With strong indications that participant recruitment and management are significant sources of friction, hindering organizations’ ability to conduct and scale research with their users, UsabilityHub hypothesized that a participant management tool would provide deeper and longer-term value to customers and support the democratization of research.

1. To determine the degree to which participant recruitment and management cause friction in the research process.

What are the barriers to conducting research, generally and more specifically with users? What are the motivations for developing a participant pool?

2. To understand the range of mental models around participant pools (PP) and PP management solutions.

How do people who do research think of participant pools & management tools [mental models]? What top features would they need in a tool? How do they expect it to function?

3. To gain perspective on participant pool development journeys across a range of industry types.

What kind of thought, investigation, or planning is done in the participant pool consideration & development process?

Team

My colleague, Miaoxin Wang, and I investigated the same research challenge, each from a different viewpoint. The Director of Product at UsabilityHub was the managing client stakeholder, and Michele Ronsen was our consultancy manager.

Impact

As this was the initial discovery research, there aren't measurable results yet, but the stakeholders' sentiment was overwhelmingly positive. In correspondence with the Director of Product following the research presentation, they shared that "we've already started putting your findings into action and are excited about the opportunities."

Due to a confidentiality agreement, only the research strategy and process are presented. Detailed findings and insights are intentionally omitted from the case study.

"Incredible work! You've absolutely blown everyone out of the water, so impressed!"

- Managing Stakeholder, Director of Product, Lyssna [UsabilityHub]

“Your work and your presentation rocked!”

- Michele Ronsen, Mentor & Founder, Curiosity Tank

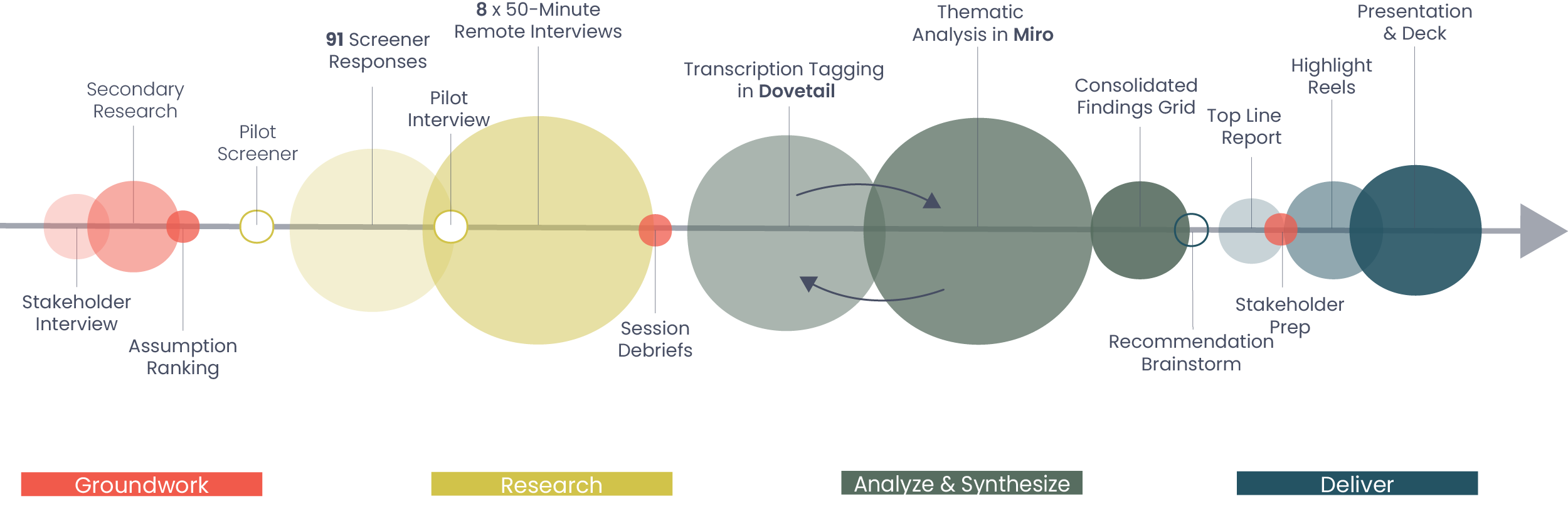

Process

Laying the Groundwork

Research motivations and context were explored through initial stakeholder meetings to set expectations and ensure the study’s strategy aligned with business goals.

Hypotheses & assumptions were identified, compiled, and ranked by impact and risk to prioritize which ones to investigate.

Subscriber demographic data, particularly industry, roles, and organization size, were requested to get a pulse on UsabilityHub’s current subscriber base and inform segmenting.

High-level secondary research was conducted, exploring third-party PP management tools and published expert advice on the topic of PP recruiting and management.

The segmentation strategy was determined through secondary research and informal inquiry.

Transcription Tagging Visualization from Dovetail

Participant Recruitment

An online screener survey, designed in SurveyMonkey using survey logic, aimed to identify individuals in organizations considering or on the brink of developing a PP. It was distributed to a segment of UsabilityHub customers and through UXD/SXD, Product Management, Design Thinking, and User Research groups.

91 Survey Responses

38 UXR | 20 UXD | 31 Other

50% do not have a pool

43% of those without a pool are looking to build one

Sample Survey Questions

💡 The screener not only served to recruit interview participants, but also provided smoke signals that informed the interview discussion guide.

Remote Interviews

8 x 50-minute interviews

Participants were distributed across two segments: 4 UX Researchers & 4 non-dedicated researchers (NDRs).

A wide variety of industries and geographic locations around the globe were represented.

Distilling the Data

After detailed note-taking and tagging in Dovetail, I synthesized the data through thematic analysis. The analysis strategy mapped to key learning objectives and evolved through breakout patterns found while iteratively working with the raw data.

3 Affinity Maps: Barriers/Pain Points; Journey; Mental Models

2 Frameworks: Must Have/Nice to Have and Gains/Risk

Plot Graph: UX Maturity vs Type of Research Friction

Empathy Map

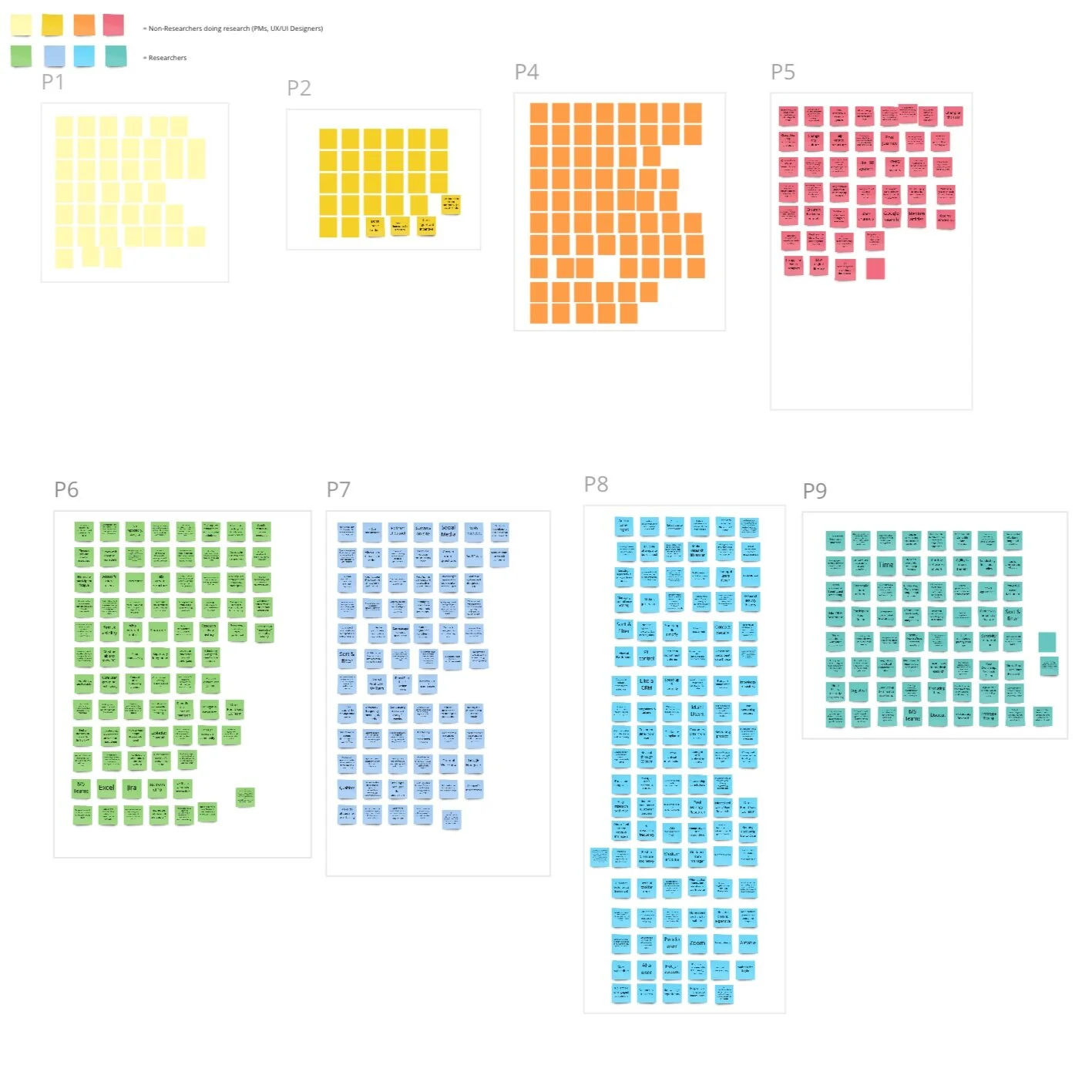

A snippet of extensive interview transcript tagging done in Dovetail.

A Clear Divide

Example breakout pattern: interview highlight tags from NDRs (top) vs. Researchers (bottom)

7 different analyses done in Miro

Formulating Insights

Fourteen findings/insights were uncovered and organized under four high-level themes:

Dedicated Researchers vs Non-Dedicated Researchers

Complexities in Recruiting

Participant Pool Purpose, Attributes, & Functions

Bandwidth, Budget, & Buy-in

Sample Insight: A “flowing stream” or network of qualified like-users (analogous users) not only addresses the perceived PP risks of user fatigue, bias, and limited diversity but is also advantageous for researchers focused on hard-to-access users.

When Risk, Want & Magic Wand Point in the Same Direction

💡 My partner and I reviewed each other’s analysis for discrepancies and substantiated findings.

Outcomes

Project deliverables included raw & tagged data in Dovetail, interview debriefs & consolidated findings, a top-line report, five highlight reels, and a presentation deck.

5x

Product Recommendations

Prioritized MVP features, design considerations, and integration and functionality roadmap guidance, as well as endorsements for enhancements to the existing product.

4x

Marketing Recommendations

Four high-level marketing strategies were presented, in addition to segment-, feature-, & journey-based concepts aligned with specific findings.

3x

Follow-up Studies

Research activities to determine target segment(s) for the MVP & integrations, identify optimizations to the existing recruitment product, and learn more about facilitating pool populating and onboarding.

Research Reflections

Assumption Audit: Ensuring a Strong Foundation for Insights

Evaluating assumptions and hypotheses based on their potential risks and rewards helped prioritize which ones needed to be investigated through user research. By clearly communicating the validity of these assumptions and hypotheses when presenting research findings, I was able to more effectively engage stakeholders and drive action.

Silent Signals: The Importance of Omissions

During data analysis, I noticed that the NDR user segment did not express a need that other segments emphasized in both studies. This observation highlighted the importance of paying attention not only to expressed needs but also to what was omitted. Recognizing these gaps and their potential implications offered valuable insights into the distinct needs and behaviors of various user segments.

Confidence in Connections: Exploring Correlations

Correlating findings across research segments enhanced the credibility of, and trust in, specific insights, providing a more robust foundation for data-driven decision-making.

Element of Surprise: Prep for Unexpected Insights

By conducting periodic debriefs, I reduced the element of surprise and increased receptivity among stakeholders. When presenting compelling, unexpected data, I found it effective to ask, "How open are you and your team to findings and suggestions related to [specific topic]? I ask because our research has revealed [specific data points or insights]." This approach helped gauge stakeholders' willingness to explore potentially challenging insights and fostered a more collaborative environment for discussing the findings.